
30th July 2020. Written by Simon Harris.

Ultrasound is widely regarded as an essential diagnostic tool and is used in many clinical applications. Its use is spreading quickly into point-of-care applications such as emergency medicine, intensive care and anaesthesia, so ultrasound is becoming ubiquitous throughout healthcare systems.

Despite strong demand for ultrasound systems, ultrasound has its limitations. Unlike other imaging modalities, ultrasound image capture is highly user-dependent, and it takes time and experience to obtain optimal results. Even experienced users acquire a significant proportion of images incorrectly. In point-of-care ultrasound (POCUS) in particular, many users struggle to capture good-quality images. Ultrasound images are also often difficult and time-consuming to interpret, and many users, even experts, struggle to interpret them objectively and quantitatively. These challenges contribute to medical errors and to higher costs through longer hospital stays and lost reimbursement.

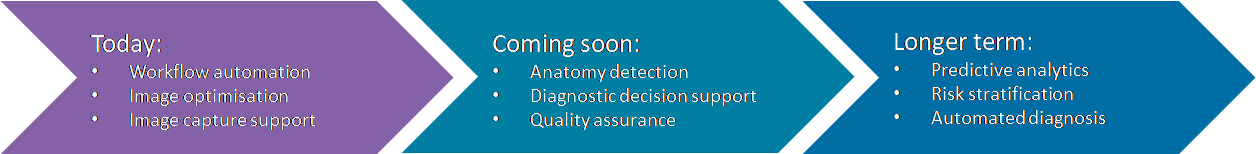

Artificial intelligence (AI) will have a transformative impact on the ultrasound market in the coming years by addressing these inherent limitations and by providing support for image capture, workflow automation and image interpretation.

Image capture assistance

AI-enabled image capture assistance provides real-time guidance during image acquisition on how to position the probe to obtain the best image quality. It also offers automated anatomy navigation to help users identify specific body parts. Examples include assistance with apical and parasternal cardiac views, gallbladder ultrasound and lung ultrasound. These are exams where users often struggle to obtain views good enough to support a diagnosis.

Image capture assistance makes ultrasound easier to use, particularly for physicians with limited ultrasound training. These include primary care physicians, point-of-care specialists and physicians across all clinical segments in developing countries. Many of these new users will never reach a high level of expertise, because ultrasound will only ever be a small part of their clinical workflow. For them, image capture assistance will become an essential tool.

Ultrasound has already had a tremendous positive impact on vascular access and anaesthesia by giving physicians real-time visualisation. AI can build on this by automatically locating the target and by guiding the clinician during the procedure. One of the main use cases is expected to be peripheral nerve block (PNB) procedures. These require knowledge of the anatomy involved in each type of nerve block and the correct technique to avoid inadvertently puncturing blood vessels or damaging nerves. AI will provide additional navigation support, particularly for less experienced physicians, which should increase the use of ultrasound-guided regional anaesthesia as an alternative to general anaesthesia.

AI will also play a key role in standardising image acquisition and reducing user variability, for example by selecting the best-quality image for feature quantification. Quality assurance is a growing concern as more inexperienced users adopt handheld ultrasound, and AI may help to address this issue.

Diagnostic decision support

Beyond image capture assistance, AI is playing an increasingly important role in image interpretation by providing clinicians with diagnostic decision support. For example, automated quantification such as ejection fraction assessment is increasingly used. It gives clinicians accurate and repeatable data to better inform diagnostic decision-making and treatment planning.

Each exam type (body area) covers multiple possible conditions and pathologies, so clinicians will need multiple AI tools to aid diagnostic decision-making. For cardiac exams, for example, users may need automated ejection fraction, segmental wall motion and wall strain measurement. Furthermore, the mix of ultrasound exams and ultrasound-guided procedures varies with the clinician's specialty and care setting. General practitioners may need AI tools to support the diagnosis of abdominal pain, respiratory problems and shoulder issues. Emergency medicine physicians, by contrast, typically use ultrasound to diagnose a broader range of clinical conditions, with cardiac and lung exams accounting for a large part of their usage. Ultimately, each user group will need an 'AI toolkit' comprising several AI applications for use across various anatomical areas (heart, lung, genitourinary, etc.).

The ultrasound industry is in the early stages of applying AI, and today most vendors offer a limited number of point solutions for specific tasks. However, most of the major ultrasound vendors are investing in AI, and around 20 to 25 independent software vendors (ISVs) are developing AI solutions for ultrasound. The number of available products is expected to multiply in the coming years, making the concept of an ultrasound AI toolkit a reality.

Breaking down the barriers

Although ultrasound has many advantages, the main barrier to its wider adoption is the skills shortage. To use ultrasound effectively, clinicians need many years of training and experience. Even then, large intra- and inter-observer variability makes it hard to obtain objective and repeatable results in ultrasound analysis. The problem is compounded by the growing number of new users in point-of-care and primary care settings, who are typically not ultrasound experts. AI is breaking down the barriers to wider use of ultrasound among both experienced and new users. It makes the devices easier to use during image capture and automates complex, time-consuming tasks during image analysis.